How to stop the onion denial (of service)

As you might have heard, some onion services have been experiencing issues with denial-of-service (DoS) attacks over the past few years.

The attacks exploit the inherently asymmetric nature of the onion service rendezvous protocol, which makes them hard to defend against. During the rendezvous protocol, an evil client can send a small message to the service, while the service has to do a lot of expensive work to respond to it. This asymmetry opens the protocol to DoS attacks, and the anonymous nature of our network makes it extremely challenging to tell the good clients from the bad.

For the past two years, we've been providing more scaling options to onion service operators, supporting more agile circuit management, and protecting the network and the service host from CPU exhaustion. While these don't fix the root problem, they give onion service operators a framework on which to build their own DoS detection and handling infrastructure.

Even though the toolbox of available defenses for onion service operators has grown, the threat of DoS attacks still looms large. And while there are still a number of smaller-scale improvements that could be made, we believe this is not the kind of problem that a parameter tweak or a small code change will make disappear. The inherent nature of the problem leads us to believe that fundamental changes are needed to address it.

In this post, we would like to present two options that we believe can provide a long-term defense against the problem while maintaining the usability and security of onion services.

The intuition to keep in mind when considering these designs is that we need to be able to offer different notions of fairness. In today's onion services, each connection request is indistinguishable from all the other requests (it's an anonymity system after all), so the only available fairness strategy is to treat each request equally -- which means that somebody who makes more requests will inherently get more attention. The alternatives we describe here use two principles to change the balance: (1) the client should have the option to include some new information in its request, which the onion service can use to more intelligently prioritize which requests it answers; and (2) rather than a static requirement in place at all times, we should let onion services scale the defenses based on current load, with the default being to answer everything.
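
Principle (2) can be pictured as a small feedback loop. The Python sketch below is a hypothetical illustration and is not part of any Tor proposal. `DefenseController` is invented for this example, and so are its watermarks, step sizes and cap. Its "level" is an abstract knob: it could stand for a required PoW effort, or for how strictly tokens are demanded. At level 0 the service answers everything, which matches the stated default.

```python
from dataclasses import dataclass


@dataclass
class DefenseController:
    """Hypothetical load-based controller for an onion service's defenses.

    `level` is an abstract knob (e.g. required PoW effort, or how strictly
    tokens are required). Level 0 means "answer everything".
    """
    high_watermark: int = 100  # pending requests that count as overload
    low_watermark: int = 10    # pending requests that count as calm
    max_level: int = 24        # cap, so weak clients are never priced out entirely
    level: int = 0

    def update(self, pending_requests: int) -> int:
        """Call periodically with the current backlog; returns the new level."""
        if pending_requests > self.high_watermark:
            # Overloaded: ramp the defense up quickly, but never past the cap.
            self.level = min(self.level + 2, self.max_level)
        elif pending_requests < self.low_watermark and self.level > 0:
            # Calm again: decay slowly back towards "answer everything".
            self.level -= 1
        # Between the watermarks the level is left unchanged.
        return self.level


if __name__ == "__main__":
    controller = DefenseController()
    for backlog in [0, 500, 500, 500, 50, 0, 0]:
        print(backlog, controller.update(backlog))
```

The controller ramps up quickly and decays slowly. That is one reasonable shape, because it keeps the defense from dropping the moment an attacker pauses. Choosing the real numbers is a tuning problem in its own right.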

Defenses based on anonymous tokens

Anonymous tokens are hot lately, and they fit Tor like a glove. You can think of anonymous tokens as tickets, or passes, that are awarded to good clients. In this particular context, we could use anonymous tokens as a way to prioritize good clients over malicious clients when a denial-of-service attack is happening.

A major question here is how good clients can acquire such tokens. Typically, token distribution relies on a scarce resource that a DoS attacker does not have in abundance: CAPTCHAs, money, phone numbers, IP addresses, goodwill. Of course, the anonymous nature of our network limits our options here. Also, in the context of the DoS problem, it's not a huge deal if an evil client acquires some tokens, but it's important that they are not able to acquire enough tokens to sustain a DoS attack.

One reasonable approach for bootstrapping a token system is to set up a CAPTCHA server (perhaps using hCaptcha) that rewards users with blinded tokens. Alternatively, the onion service itself can reward trusted users with tokens that can later be used to regain access. We can also give users tokens with every donation to the Tor Project. There are many different types of tokens we can use and different user workflows we can support. We have lots of ideas on how these tokens can interact with each other, what additional benefits they can provide, and what the "wallets" could look like (including Tor Browser integration).

An additional benefit of a token-based approach is that it opens up a great variety of use cases for Tor in the future. For example, tokens could be used to restrict abuse of Tor exit nodes by spam and automated tools, thereby reducing censorship of exit nodes by centralized services. Tokens can also be used to register human-memorable names for onion services. They could also be used to acquire private bridges and exit nodes for additional security. Lots of details need to be ironed out, but anonymous tokens seem like a great fit for our future work.

In terms of cryptography, anonymous tokens in their basic form are a type of anonymous credential and use technology and UX similar to PrivacyPass. However, we can't use PrivacyPass as is, because we want one party to be able to issue tokens while another party verifies them, which PrivacyPass does not currently support. For example, we want to be able to set up a token-issuing server on a separate or independent website, and still have onion services (or their introduction points) be able to verify the tokens issued by that server.
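
The RSA blind signature is one classic construction that separates issuing from verifying. The issuer signs a blinded value it cannot read. Later, anyone who holds the issuer's public key can check the unblinded signature. The toy Python sketch below shows that flow. It illustrates the general technique and is not the token scheme the Tor Project will adopt. The hard-coded key is tiny and insecure by design. The names (`blind`, `issuer_sign`, `Verifier`, the spent-token set) are hypothetical.

```python
import hashlib
import math
import secrets

# Toy RSA key, for illustration only: two Mersenne primes (2**61 - 1, 2**89 - 1).
# A real system would need a properly generated 2048+ bit key and a standard
# blind-signature padding scheme; this sketch deliberately has neither.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                # public modulus
E = 65537                # public exponent
PHI = (P - 1) * (Q - 1)
assert math.gcd(E, PHI) == 1
D = pow(E, -1, PHI)      # issuer's private exponent (needs Python 3.8+)


def hash_to_int(token, n=N):
    """Map a token into Z_n (a toy full-domain hash)."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % n


# --- Client ---------------------------------------------------------------
def blind(token):
    """Hide H(token) behind a random factor r, so the issuer learns nothing."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            break
    return (hash_to_int(token) * pow(r, E, N)) % N, r


def unblind(blind_sig, r):
    """Remove r, leaving an ordinary RSA signature on H(token)."""
    return (blind_sig * pow(r, -1, N)) % N


# --- Issuer (e.g. a separate CAPTCHA or donation server) -------------------
def issuer_sign(blinded):
    """Sign the blinded value; the issuer never sees the token itself."""
    return pow(blinded, D, N)


# --- Verifier (onion service or intro point; holds only the public key) ----
class Verifier:
    def __init__(self, n, e):
        self.n, self.e = n, e
        self.spent = set()  # tokens already redeemed, to stop replays

    def redeem(self, token, sig):
        if token in self.spent or pow(sig, self.e, self.n) != hash_to_int(token, self.n):
            return False
        self.spent.add(token)
        return True


if __name__ == "__main__":
    token = secrets.token_bytes(16)
    blinded, r = blind(token)
    sig = unblind(issuer_sign(blinded), r)
    verifier = Verifier(N, E)
    print(verifier.redeem(token, sig))  # True
    print(verifier.redeem(token, sig))  # False: already spent
```

The verifier needs only `N` and `E`, so the issuing server and the onion service never have to share a secret. The issuer also cannot link the blinded value it signed to the token that is eventually redeemed. If several intro points accept the same tokens, a real deployment would need a way to share the spent-token list between them.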

Of course, when most tech people hear the word "token" in 2020, their minds jump straight to blockchains. While we are definitely monitoring the blockchain space with great interest, we are also cautious about picking a blockchain solution. In particular, given the private nature of Tor, it's hard to find a blockchain that satisfies our privacy requirements and still provides us with the flexibility required to achieve all our future goals. We remain hopeful.

Defenses based on Proof-of-Work

Another approach to solving the DoS problem is to use a Proof-of-Work (PoW) system to reduce the computational asymmetry gap between the service and the attacker.

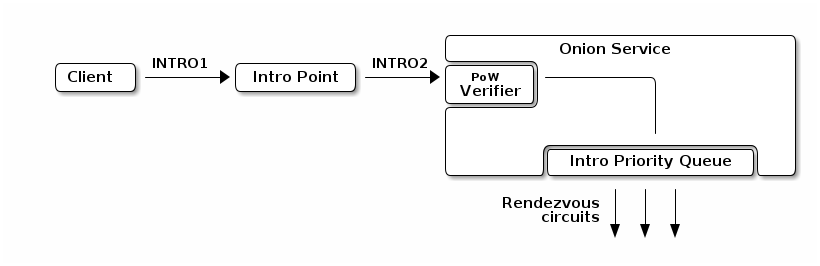

In particular, onion services can ask the client to solve a Proof-of-Work puzzle before allowing entry. With the right Proof-of-Work algorithm and puzzle difficulty, this can make it impossible for an attacker to overwhelm the service, while still making it reachable by normal clients with only a small delay. Similar designs have also been proposed for TLS.
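
Here is a hashcash-style Python sketch that makes the asymmetry concrete. The client searches for a nonce that, hashed together with a service-provided seed, gives a SHA-256 digest starting with a given number of zero bits. The service checks the answer with a single hash. SHA-256 and the seed handling are stand-ins chosen for simplicity. The post does not say which proof-of-work algorithm the proposal uses, and the choice of function matters a great deal for how much specialized hardware helps an attacker.

```python
import hashlib
import os


def leading_zero_bits(digest):
    """Number of leading zero bits in a hash digest."""
    bits = 0
    for byte in digest:
        if byte:
            return bits + 8 - byte.bit_length()
        bits += 8
    return bits


def pow_hash(seed, nonce):
    """Hash the service's seed together with the client's candidate nonce."""
    return hashlib.sha256(seed + nonce.to_bytes(8, "big")).digest()


def solve(seed, difficulty):
    """Client side: expected cost is about 2**difficulty hash evaluations."""
    nonce = 0
    while leading_zero_bits(pow_hash(seed, nonce)) < difficulty:
        nonce += 1
    return nonce


def verify(seed, nonce, difficulty):
    """Service side: checking a solution costs a single hash evaluation."""
    return leading_zero_bits(pow_hash(seed, nonce)) >= difficulty


if __name__ == "__main__":
    seed = os.urandom(32)  # hypothetical per-service seed, published by the service
    nonce = solve(seed, 16)
    print(nonce, verify(seed, nonce, 16))
```

Each extra bit of difficulty doubles the client's expected work but leaves the service's verification cost unchanged. That gap is the lever the post describes.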

We have already started writing a proposal for this system (with the great help of friends and volunteers), and we are reasonably confident that existing proof-of-work algorithms can fit our use case while providing the right level of protection. Given the complexity of the system, there is still work to be done on parameter tuning as well as on tuning the Tor introduction scheduler, but initial analysis looks very promising.

The great benefit of this approach is that the proposed PoW system is dynamic and automatically adapts its difficulty based on the volume of malicious activity that is hitting the service. Hence, when there is a big attack wave the difficulty increases, but it also scales down automatically when the wave has passed. Furthermore, if we pick a proof-of-work system where doing more work creates a better proof, motivated clients can "bid" for attention by spending time to create an extra-good proof, which would let the onion service prioritize it over the other requests. This approach turns the asymmetry to our advantage: good clients make a small number of connections and they need a way to stand out amid an attacker pretending to be many clients.
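
The "bidding" idea amounts to a priority queue at the service. Verified effort decides the order, and when the backlog is full, the cheapest requests are the first to go. Below is a minimal Python sketch that assumes each request's effort has already been verified. `IntroQueue`, its capacity, and its tie-breaking rule are illustrative assumptions, not Tor's actual introduction scheduler.

```python
import bisect
import itertools


class IntroQueue:
    """Hypothetical bounded queue of pending introduction requests.

    Requests are ordered by their (already verified) effort. When the queue
    is full, the lowest-effort request is dropped; among equal efforts the
    older request wins.
    """

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._items = []               # sorted ascending by (effort, -arrival)
        self._arrivals = itertools.count()

    def push(self, effort, request):
        """Queue a request and return whichever request got dropped, if any."""
        entry = (effort, -next(self._arrivals), request)
        if len(self._items) < self.capacity:
            bisect.insort(self._items, entry)
            return None
        if entry[:2] <= self._items[0][:2]:
            return request             # no better than the worst queued request
        bisect.insort(self._items, entry)
        return self._items.pop(0)[2]   # evict the current worst request

    def pop(self):
        """Serve the highest-effort request (the oldest one on ties)."""
        return self._items.pop()[2]

    def __len__(self):
        return len(self._items)


if __name__ == "__main__":
    queue = IntroQueue(capacity=2)
    queue.push(5, "a")
    queue.push(1, "b")
    print(queue.push(3, "c"))        # b: lowest effort is evicted
    print(queue.push(2, "d"))        # d: not better than anything queued
    print(queue.pop(), queue.pop())  # a c
```

A sorted list keeps the sketch short. A real scheduler handling many requests would want a structure with cheaper insertion and eviction at both ends.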

Conclusion

We believe that the above two schemes provide a concrete framework that will strengthen the resilience of onion services in a fundamental way.

Both proposals can be applied together, and they complement each other. It's worth pointing out that both proposals are technically and cryptographically heavy and have their own drawbacks and limitations. Nobody wants a Tor network full of CAPTCHAs or mobile-prohibiting PoW puzzles. Parameter tuning and careful design are vital here. After all, DoS resilience is a game of economics: the goal is not to be perfect, but to raise the bar enough that the attacker's financial cost of maintaining an attack is higher than the gain. Put another way, the steady state we want to reach is one where you typically don't need to present a CAPTCHA or token to visit an onion service, because nobody is attacking them: the attacks don't work.

We hope this post inspires you to think about our ideas and come up with your own attacks and improvements. This is not a problem we can solve overnight, and we appreciate all the research and help we can get. Please get in touch!

Supporting Tor

Making contributions to the Tor Project can help us solve this problem. These efforts are not easy or quick, and as a result, they are not cheap. We can't implement these solutions with the Bug Smash Fund alone, but a contribution during the month of August will go towards this effort and others like it. Thank you for supporting Tor!

Comments

Please note that the comment area below has been archived.

Great! I like idea with…

Great! I like the idea of defenses based on anonymous tokens. Fingers crossed! Time to send another donation.

You can develop any of those…

You can develop any of those proposed solutions, but you still haven't fixed the underlying problem. I don't know why you guys don't wanna do it.

- An attacker does not need to make a circuit to the rendezvous point. It can just put a random IP address in the INTRO2 cell.

- An attacker can still make a two-hop circuit to the intro point.

>Alternatively, the onion…

>Alternatively, the onion service itself can reward trusted users with tokens that can later be used to regain access.

Isn't this already being done? Some onion sites give you alternative addresses to use after you have set up an account. Different addresses use different introduction points, right? So the idea is to segment users and spread the attack surface across more of the network, as I understand it. I imagine sites could even generate a unique address for each user account.

*bump* (sorry, but I'm…

*bump* (sorry, but I'm really interested in the answer to this)

Hello and sorry for the late…

Hello and sorry for the late reply.

Giving a unique address to each of your users is kinda the same as giving an access token to each of your users in terms of UX. However, it's not the same in terms of security (each of those addresses is exposed to a different set of guard nodes and intro points). It's also not the same in terms of network utilization (each of those onions uploads new descriptors and uses new intro points). Hence it's better for the network and for onion security to have a single onion service instead of many.

> Alternatively, the onion…

> Alternatively, the onion service itself can reward trusted users with tokens that can later be used to regain access.

CAPTCHAs are for web browsers. Would the onion service be able to reward tokens to torified client programs that are not browsers?

> We can also give users tokens with every donation to the Tor Project.

So wealthy and well-funded attackers could exchange money for leniency or permission like the commercialization of Catholic indulgences?

https://en.wikipedia.org/wiki/Indulgence

> For example, in the future tokens could be used to restrict malicious usage of Tor exit nodes by spam and automated tools hence reducing exit node censorship by centralized services.

And network researchers? Will tokens restrict auditing other aspects of Tor protocols and the network, leaving orthogonal bugs undetected?

> Tokens can also be used to register human-memorable names for onion services.

Namecoin has vulnerabilities similar to Bitcoin's, as well as a similar learning curve. It sounds outside the scope of the Tor Project.

> Lots of details need to be ironed out

Yes.

> anonymous tokens at their basic form are a type of anonymous credential and use similar technology and UX to PrivacyPass.

Like CAPTCHAs, PrivacyPass is for web browsers and not for torified client programs in general. Furthermore, a client program's support for PrivacyPass tokens can be used to fingerprint it, unless the client belongs to a large group of clients with identical support.

> it's hard finding a blockchain that satisfies our privacy requirements and still provides us with the flexiblity required to achieve all our future goals.

I am so happy Tor Project is evaluating privacy of blockchains.

> a Proof-Of-Work system to reduce the computational asymmetry gap between the service and the attacker.

If it turns out well, I hope it could be adapted for the clearnet, or released as a library, or generalized into a lower OSI layer.

> mobile-prohibiting PoW puzzles

I'm so happy you're thinking of this.

I'm enlarging this because it needs emphasis.

> So wealthy and well-funded…

> So wealthy and well-funded attackers could exchange money for leniency or permission like the commercialization of Catholic indulgences?

I agree that this seems like a good analogy. I often cannot imagine why TP is even considering something it is considering. The really scary question is what I would think about the decisions they make WITHOUT "consulting" the users.

Hello, discussions and…

Hello,

discussions and brainstorming on possible DoS solutions have been ongoing on our mailing lists for years. We are interested in user feedback and that's why we do all our development in public and also write these blog posts.

Well-funded attackers can…

Well-funded attackers can always do DoS, though. Whatever resource it takes to impersonate a horde of genuine users, they can always buy it. If they have to, a sufficiently well-funded hacker can actually hire a bunch of real people to go attack a service, either by overloading it or by doing something else, like say trolling a forum into nonexistence.

That may be unfair, but it's a form of unfairness that cannot be fixed, so it doesn't pay to get too hung up on it. In most DoS situations, the best you can do is to shut down the poorly funded attackers, and make the well-funded ones spend more money than they think the attack is worth.

>> mobile-prohibiting PoW…

>> mobile-prohibiting PoW puzzles

One point to bear in mind there is that many people (not me alas) have other options for mobile phones, e.g. Signal.

Of course, if FBI Director Wray gets his way, both Tor and Signal will become illegal inside the USA. (EFF confirms the second but has said nothing about the first.)

Hello, here is a bunch of…

Hello,

here are some replies to your comments:

It should be possible to insert tokens into a torified client program that is not a web browser. This could be done using the filesystem, the control port, or some other mechanism.

If we give out tokens with Tor Project donations (and I'm saying if because this is an idea that needs more thinking), we don't expect that adversaries will be able to use those tokens to launch attacks against onion services.

Also, we don't expect tokens or PoW to restrict research by security people. Security people have other, more effective ways to penetration-test Tor, such as setting up private Tor networks (see chutney).

We are aware that Namecoin has its own problems, but all secure name systems have their own problems. We don't think Namecoin has a big learning curve for regular users, though perhaps it does for registering domains. We don't think that solutions like Namecoin or other secure name systems are outside the scope of the Tor Project at all.

And yes, lots of details definitely need to be ironed out, for both the token and the PoW approaches.

Thanks for your comment!

> it should be possible to…

> it should be possible to insert tokens to a torified client program that is not a web browser. This could be done using the filesystem or using the control port or using some other mechanism.

Wouldn't such tokens be handled by the Tor client itself? Otherwise every app you want to torify will have to be modified to add support for tokens. I really hope this can happen below the app layer.

Agreed. If the token…

Agreed. If the token insertion is done over the control port or using the Tor client filesystem directory this would be below the app layer.

Something's broken in the…

Something's broken in the comment system. When I submit a comment, clicking the first Preview button takes me to a page rendering my comment and the edit box above the blog post, and the next Save and Preview buttons are past the blog post at the very bottom of the page.

Yep it's not a nice…

Yep it's not a nice interface. We really need a new blogging platform :/

I am also seeing that.

I am also seeing that.

For the love of God, please,…

For the love of God, please, NO CAPTCHAS. Not for anything, ever.

They're horribly annoying for interactive users.

Software for solving them improves periodically, leading to unpredictable disruption.

The ones that are hardest for software to solve are insoluble for some ACTUAL HUMANS (actually ALL of them are insoluble for SOME actual humans). And let's not forget that people using Tor may be inhibited in who they can ask for help. It'd probably be a lot less discriminatory to just ask for money.

Speaking of which, CAPTCHAs can be defeated for Mere Money(TM) by hiring people to solve them. This has been done in practice.

They preclude automated use cases, and there is zero reason to think that there aren't or won't be important automated use cases for hidden services.

Please, PLEASE do not bake CAPTCHAs into the network, and do not rely on anything outside of the network being able to use them either.

> Please, PLEASE do not bake…

> Please, PLEASE do not bake CAPTCHAs into the network, and do not rely on anything outside of the network being able to use them either.

Plus one.

Sometimes I wonder if some Tor devs have ever actually used Tor. Sorry. I know you are very busy, but proposing to bake in CAPTCHAs is just.... so weird that it makes me wonder semi-seriously whether CIA or GRU broke into the blog and took it over.

Hello and thanks for your…

Hello and thanks for your comment.

Nobody likes CAPTCHAs and as we said in the blog post we don't want Tor to become a CAPTCHA world.

In the case of DoS, CAPTCHAs will only be enabled by the onion service operator if the operator believes that the site would be unreachable otherwise. I think it's fair to let the operator choose, and I think that a site being totally unreachable is strictly worse than having the option to solve a CAPTCHA to get in.

@asn: > As you might have…

@asn:

> As you might have heard, some onion services have been experiencing issues with denial-of-service (DoS) attacks over the past few years. The attacks exploit the inherent asymmetric nature of the onion service rendezvous protocol, and that makes it a hard problem to defend against. During the rendezvous protocol, an evil client can send a small message to the service while the service has to do lots of expensive work to react to it. This asymmetry opens the protocol to DoS attacks, and the anonymous nature of our network makes it extremely challenging to filter the good clients from the bad.

Hang on, I am very confused. So the bad guy's Tor client sends a SMALL message to the rendezvous node, which has to do a lot of work to react... but do good guy Tor clients ALSO send such SMALL messages when they want to use an onion service? Can the small size be detected by the rendezvous point BEFORE doing the expensive work? Is the expense a handshake which must be completed BEFORE the rendezvous point sees even the encrypted SMALL message?

> the client should have the option to include some new information in its request, which the onion service can use to more intelligently prioritize which requests it answers

Wouldn't that help attackers deanonymize users?

> You can think of anonymous tokens as tickets, or passes that are rewarded to good clients. In this particular context, we could use anonymous tokens as a way to prioritize good clients over malicious clients when a denial of service attack is happening.

What is the definition of "malicious"? Do you have reason to be confident that your definition includes only persons who are definitely performing a DDOS on the Tor network? How can you be sure this will not penalize people who use Tor for innocuous purposes such as reading about a former USN officer who was just arrested on a luxury motor yacht owned by an alleged billionaire and accused rapist? If such innocuous people use Tor to communicate just like the just-arrested bad guy (reading between the lines of the indictment), given Tor's almost nonexistent user feedback, how would good guy users even complain that they are (i) not attacking the Tor network but (ii) being unfairly treated as if they were attacking Tor?

Also, of course, the GRU, the FBI, my parakeet, my cat, the neighborhood coyote, etc all have very different definitions of "bad guy". In this context the definition should fit only persons who are actually knowingly carrying out a DDOS attack on Tor.

> One reasonable approach for bootstrapping a token system is to setup a CAPTCHA server (perhaps using hCaptcha) that rewards users with blinded tokens.

Many users including me just give up when we are demanded (previously only by non-Tor sites) to complete CAPTCHAs, because we have learned that the bastards are never satisfied with one but just keep endlessly demanding another.

> Alternatively, the onion service itself can reward trusted users with tokens that can later be used to regain access

How on earth can you recognize "trusted users" without deanonymizing them? For that matter, it seems that even in the clearweb, websites cannot automatically distinguish bad guys (e.g. spreading disinformation) from good guys.

> We can also give users tokens with every donation to the Tor Project.

Does that mean that you will no longer accept non-electronic donations? That users who do not use email will no longer be able to use Tor?

> Another approach to solving the DoS problem is using a Proof-Of-Work system to reduce the computational asymmetry gap between the service and the attacker.

Is it correct to say that PoW will slow down initial connections to an onion (e.g. the DuckDuckGo search engine, or SecureDrop, yes?) but will not involve any attempt (obviously doomed to fail) to define and distinguish between and treat differently different real-life identities behind Tor clients? That PoW works by requiring all clients to do some hard work each time they connect to an onion, which legit users can tolerate but spammers and DDOS bots cannot, because of their enormous volume of many SMALL messages?

The first approach sounds discriminatory and unworkable to me. The second approach might be workable if the answer to the questions in the previous paragraph is "yes".

A few more questions: is this DDOS the reason why the DuckDuckGo onion is suddenly performing very badly? (I first noticed that yesterday.) Why Tails does not use "always prefer onions" by default? Why I have noticed more problems using Tor Browser under Tails in the past two days? Why this blog is more reluctant than usual to accept submitted comments? Do we have any idea whether this is a government or an ordinary cybercriminal gang doing the DDOS?

> So the bad guy's Tor…

> So the bad guy's Tor client sends a SMALL message to the rendezvous node, which has to do a lot of work to react... but do good guy Tor clients ALSO send such SMALL messages when they want to use an onion service?

I think legit packets are small too. The bottleneck here is computation, not network throughput, so the size of the message really isn't relevant. I think they just wanted to note that these packets are small and don't take a lot of bandwidth for an attacker. I'm not entirely sure either, though.

>> the client should have the option to include some new information in its request, which the onion service can use to more intelligently prioritize which requests it answers

>Wouldn't that help attackers deanonymize users?

It's just cryptographic information. For example, it might say "Proof that I did X amount of work", in which case, when an intro point is under attack, it will accept the highest values of X first and drop the lower values of X that it doesn't have time for. But yes, we always need to be careful of subtle information leaks.

> What is the definition of "malicious"?

Ideally we won't have to define it at all. The onion service, or maybe even the individual intro points themselves, will decide when they are at their max capacity, and begin to prioritize clients accordingly.

The idea here is **not** necessarily to make onions accessible to legitimate users during an attack. The idea is to make attacks more expensive so that they are less practical and sustainable, and therefore occur less frequently.

> How on earth can you recognize "trusted users" without deanonymizing them?

That's totally up to the onion service. Most likely it would mean users who have a well-established account with you. Think of it like giving those users a VIP pass they can use to skip the line next time they visit.

> Is it correct to say that PoW will slow down initial connections to an onion

Yes, but only when the onion service (or intro point) is at its maximum capacity. Most of the time it will continue to operate as it does now. It's economics: when demand begins to exceed supply, the computational "cost" goes up. The more the cost rises, the faster an attacker will run out of money.

When a new edition of Tails…

When a new edition of Tails comes out, I use torify curl to download it. Would that be interpreted by your robot algorithm as making my Tor client a "bad" client? I really do feel that I have good reason to be very careful in downloading and verifying this ISO image, and I only do this for Tails, once every two months or so. It should not make me be considered a "bad guy", particularly since I send you a large fraction of my modest wealth.

Don't worry. We are not…

Don't worry. We are not planning to kill such reasonable use cases.

Thanks for the reply (I am…

Thanks for the reply (I am honored in a way) but don't you see what is wrong with what you said?

What the worried users (me and others) want TP to say is "we are planning to ensure that users not doing DDOS will NOT be excluded and that anyone who experiences issues will be able to anonymously report the problem and request redress".

What you said is that TP is "not planning to kill reasonable use cases". But without a human-comprehensible definition of "reasonable" this is a nondenial denial.

Hello. You presented a use…

Hello. You presented a use case: "Downloading a Tails ISO using torify curl" and I said that this is a reasonable use case that shouldn't be flagged as DDoS or malicious activity.

If this was not satisfactory for you, please wait for the technical proposal, and once the actual technical post is out, let's discuss the details if it doesn't fit your requirements.

> The great benefit of this …

> The great benefit of this [Proof of Work] approach is that the proposed PoW system is dynamic and automatically adapts its difficulty based on the volume of malicious activity that is hitting the service. Hence, when there is a big attack wave the difficulty increases, but it also scales down automatically when the wave has passed.

That sounds acceptable to me. It also sounds similar to what long-time Tor users know from experience: large-scale attacks can make Tor slow at times, but so far we have always recovered from them.

I don't understand why you would even be considering tokens since PoW sounds far superior. You said tokens fit Tor like a glove but I have no idea why anyone would think that. It seems to me that quite the opposite is true, but for all I know I simply do not yet know enough about these so-called anonymous tokens. From what little you said, I picture something like a cookie which can be exploited to deanonymize us or track our websurfing. Which would be bad at best and fatal at worst. The grim fate of Navalny (no angel to be sure) once again underlines the gravity of the current threat environment confronting political dissidents all over the globe.

Hello there, thanks for the…

Hello there,

thanks for the comment.

It's fair to say that DoS is not a solved problem on the Internet, and hence no single solution (e.g. tokens, PoW, etc.) can totally defend against it. While PoW can defend against certain adversary classes and fit certain user models (see proposal), tokens can defend against another set of DoS adversary classes. Both solutions can work together. Furthermore, tokens have a bunch of other use cases that can benefit Tor, which I only mentioned briefly in this blog post.

And no, we are not planning to make tokens a technology like a tracking cookie (see how blind signatures work, for example).

> Tokens can also be used to…

> Tokens can also be used to register human-memorable names for onion services

I am aware that some users greatly desire this, but I think it's a bad idea. Much better is to get in the habit of storing long onion addresses on an encrypted USB stick. In future there may be dedicated hardware devices which make this kind of safe storage and retrieval easier, which not only exist but which are widely available in all those drugstores which are covertly using facial id to track customers within 100 m of the store.

Hm. I don't agree with this…

Hello and thanks for comment.

I don't agree with this.

Storing long onion addresses on an encrypted USB disk might work for you, but it definitely doesn't work for most of humanity. If we ever hope to bring Tor out of its niche and into the hands of more people, we need to fix its bad UX elements. Huge random domains are unusable for most people and make onions very hard to use for anyone other than techies or extremely motivated users.

I probably sound like a…

I probably sound like a broken record by now, but: https://blog.torproject.org/cooking-onions-names-your-onions

Developing a secure, decentralized, and globally unique name system is a *very* difficult problem. Many have tried, and so far, all have more-or-less failed. Tor Project, please don't try to roll your own here. Not only is it error-prone, but I think it's beyond the scope of Tor Project. I truly think the pluggable approach described in that post is the right way to go.

And before someone says I2P: no, .i2p names are not globally unique.

That being said, yes, I also agree it would be nice to have, and would make onion services dramatically more useful.

Per-user nicknames are far…

Per-user nicknames are far easier and safer than a global naming system.

Even if you build a first-come, first-served global system, say using a block chain, you still have massive problems with impersonation, because names also have meaning outside of your system. I may be the first user who claims "firstnationalbank.onion", but that doesn't mean that I'm the entity that users think of as "First National Bank"... and the very users you're worried about will be confused about that.

So then you end up needing ways to curate the namespace, which makes building your own version of Namecoin or ENS look like a walk in the park. You don't want to go there.

On the other hand, you can feasibly solve much of the usability problem just by letting the user choose the name they'll use for a given site after the first use. Maybe the site could recommend the name, or even better maybe somebody referring the user to the site could recommend the name... but make the name local to that user and ultimately that user's responsibility, and make sure the user understands that.

Some possibilities:

... and if you really must have a global naming system, please don't go and invent a new one because the existing ones don't have some "nice to have" feature, or are a little bit tricky to integrate, and least of all because you "don't want to require users to interact with another system". If any existing system can support the essential features at all, please just adopt it, and spend your energy putting user interface sugar around it to ease any pain points.

There's way, way too much fragmentation in this stuff. Every time you introduce a new naming system, you expend extra resources, reduce economies of scale, reduce any scale-based security gains (which these systems usually have)... and introduce an opportunity for the owner of a given name in one system not to be the same as the owner of the same name in another system.

Hello, very recently we actually did start a per-user nicknames project using HTTPS-Everywhere: https://twitter.com/torproject/status/1267847556886740992

I think this is a nice way to make short-term progress on this problem, but IMO per-user names are not the way to go in the future. People are used to global names, and non-global names would just cause too much confusion for common users.

That said, our work with HTTPS-Everywhere takes the first steps in that direction. It still doesn't support custom rulesets, because of all the unanswered security issues (e.g. phishing and info leaks) that come with non-global names, but with a bit more work we can get there.

Since you seem to have a good grasp of the situation, could you help by doing some mockups of what the UX could look like, or some security analysis?

You can find more details here: https://trac.torproject.org/projects/tor/ticket/28005

and also here https://blog.torproject.org/cooking-onions-names-your-onions

Cheers!

I don't think what you have with HTTPS-Everywhere is at all the same thing I'm suggesting. You seem to be proposing that users accept blocks of opaque, incomprehensible rewriting rules created by third parties. I can see why that would worry you.

I'm proposing that each user assigns their own names to sites, one by one, maybe with some tentative suggestions from site operators.

I really want to object to this:

If you have global names, it's true that "common users" won't feel confused. They'll think they're not confused. That doesn't mean that they won't actually be confused.

In fact, they'll be badly confused about what it really means for a given name to exist in your name space. If you offer global names, "common users" will be at much greater risk of truly dangerous confusion. They're already confused that way by regular DNS names, and that confusion is constantly used against them.

In particular, the phishing risk would be enormously worse with any Tor-adoptable system of global names than it could ever possibly be with local nicknames. Claiming "firstnationalbank.onion" would be an absolutely fantastic way to impersonate First National Bank, with nobody able to shut you down and a very small risk of being caught.

In the sort of global naming system that Tor would have to adopt, phishing and general impersonation are far, far worse problems than in traditional DNS. When a scammer registers "firstnationalbank" in any traditional DNS TLD, there's a registrar who has at least some information about who registered it, and, more importantly, who can take it down.

Obviously, you could build a system that let some "trusted party" take down Tor names, or even approve them before they were created. The results would be worse than widespread phishing. That party would become the arbiter of who was a legitimate user of the system, and would be under massive pressure to start taking things down right and left.

It would start with impersonation (... and how is the arbiter actually going to know when there's impersonation?). It would go from there to drugs and child porn. Then it'd be piracy. Once those precedents had been set, it would go on to pressure to shut down (or at least de-name) anybody who violated any bogus law in the most dictatorial hell-hole on the planet, or who ticked off anybody with any power. Any refusal to take down a name would be treated as an endorsement of the service in question.

Neither the Tor project nor anybody else is in a position to deal with that kind of pressure. The whole point of having hidden services is to allow publishing things that powerful people don't want published. If a naming system doesn't support that, it's not fit for purpose.

Based on many years of thinking about the naming problem, and on having seen probably dozens of attempts to solve it, I believe that it's impossible to create a global naming system that's fit for Tor, period. Not in any way whatsoever, and regardless of how nice it would be to have one. I'm not the first to reach that conclusion, either.

I think unwillingness to accept that sort of limitation is a symptom of a systemic problem in the Tor Project's thinking. You're so concerned with "ease of use" that you forget about users' needs to actually be protected, and to understand how they are and aren't protected. "Ease of use" starts to amount to "making it easy to put yourself at risk". If a person doesn't have to think about what a name means, I guess that's easy... but it's easy in the same way that "it's always easy to die".

One of these days, somebody is in fact going to die from "ease of use"... if somebody hasn't already.

As for local names, your "mockup" of the user's introduction to them is something like a dialog box that says:

The blocking option gives them a way to record it when they notice an obvious impersonation attempt, and protect themselves against it if they run across the same thing again.

You provide a separate page they can go to to see, edit, and revoke the nicknames they've created.

Obviously you don't let nicknames collide with real key-based names. You can do that just by checking length.
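A minimal sketch of that length check, assuming only v3 addresses matter (56 base32 characters) and a hypothetical nickname syntax of at most 32 lowercase letters, digits, and hyphens. `is_v3_onion_label` and `is_valid_nickname` are illustrative names, not anything Tor Browser ships:

```python
import re

V3_ADDRESS_LEN = 56  # a v3 onion address label is 56 base32 characters
V3_LABEL_RE = re.compile(r"[a-z2-7]{%d}" % V3_ADDRESS_LEN)

# Hypothetical nickname syntax: 1 to 32 characters, so a nickname can never
# have the length of a key-based v3 address.
NICKNAME_RE = re.compile(r"[a-z0-9][a-z0-9-]{0,31}")


def is_v3_onion_label(label: str) -> bool:
    """True if the label has the *shape* of a v3 address (checksum not verified)."""
    return V3_LABEL_RE.fullmatch(label) is not None


def is_valid_nickname(label: str) -> bool:
    """True if the label is acceptable as a local, per-user nickname."""
    return NICKNAME_RE.fullmatch(label) is not None
```

Because the two length ranges never overlap, a label can be classified before any lookup happens.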

If you're storing the nicknames and blocked site list in the network, there are a bunch of other issues to work out, but they're not really nickname-specific.

You mentioned information leaks. The answer to that is never to let the nicknames appear on the wire or in HTML, form data, or whatever. They should exist only in the URL bar, and maybe in link hover text. They should always be translated to regular key-based .onion addresses before they're fed into the main machinery of the browser, copied to the clipboard, or whatever. You also shouldn't accept them from the rest of the browser machinery. Sites should not be able to use them in links, since that might send different users to different targets.
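A minimal sketch of that rule, assuming a hypothetical per-user `NicknameStore`. Nicknames are translated to key-based addresses at the single point where the user types into the URL bar, so network requests, HTML, and the clipboard only ever see the full .onion address. Links and other site-supplied URLs are deliberately never passed through it:

```python
from urllib.parse import urlsplit, urlunsplit


class NicknameStore:
    """Hypothetical per-user nickname table, kept only on the user's machine."""

    def __init__(self) -> None:
        self._onion_for: dict[str, str] = {}

    def add(self, nickname: str, onion_host: str) -> None:
        self._onion_for[nickname.lower()] = onion_host

    def revoke(self, nickname: str) -> None:
        self._onion_for.pop(nickname.lower(), None)

    def resolve_typed_input(self, typed: str) -> str:
        """Translate what the user typed in the URL bar into a key-based URL.

        Call this ONLY on URL-bar input. Links, redirects, form actions, and
        anything else a site supplies must never be passed through it, so a
        site cannot send different users to different targets."""
        parts = urlsplit(typed if "://" in typed else "http://" + typed)
        onion = self._onion_for.get(parts.hostname or "")
        if onion is None:
            return urlunsplit(parts)  # not a nickname: no translation
        netloc = onion if parts.port is None else f"{onion}:{parts.port}"
        return urlunsplit(parts._replace(netloc=netloc))


if __name__ == "__main__":
    store = NicknameStore()
    store.add("bank", "a" * 56 + ".onion")  # placeholder address, not a real service
    print(store.resolve_typed_input("bank/login"))
```

Keeping the translation in one function makes it easy to audit that nicknames never escape the URL bar.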

As for how the site suggests a name, it seems as though you already have a precedent for custom HTTP headers with Onion-Location. You could add an "Onion-Preferred-Nickname" header. When people reached sites by clicking on links, you could also suggest names based on the link text... again making it clear that you had no other evidence that that text corresponded to the actual site.
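A sketch of how a browser might treat the proposed `Onion-Preferred-Nickname` header (a header suggested in this comment, not an existing standard). The value is only ever offered to the user and never applied automatically; the nickname syntax and the `suggested_nickname` helper are assumptions:

```python
import re
from typing import Mapping, Optional

# Assumed nickname syntax: 1 to 32 lowercase letters, digits, or hyphens.
NICKNAME_RE = re.compile(r"[a-z0-9][a-z0-9-]{0,31}")


def suggested_nickname(headers: Mapping[str, str], taken: set[str]) -> Optional[str]:
    """Return a nickname to *offer* the user, or None if there is nothing usable.

    The result is only a suggestion: the user must confirm or edit it, and the
    UI should say that the site chose it and that it proves nothing about who
    runs the site."""
    for name, value in headers.items():
        if name.lower() != "onion-preferred-nickname":  # header names are case-insensitive
            continue
        candidate = value.strip().lower()
        if NICKNAME_RE.fullmatch(candidate) and candidate not in taken:
            return candidate
    return None
```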

Actually, you might be able to be a little more free about encouraging users to accept site-suggested nicknames in the specific case where they'd just been redirected to the .onion from a regular DNS domain that had been validated with X.509 and matched the suggested nickname. But honestly that's not a very interesting or common case, and X.509 is not as safe as people think it is.

Is Tor Project still working with Tails Project? Won't some of the changes proposed above break Tails?

Hey. What do you have in mind?

Proof of work may be pointless in the long run, as most DDoS attacks aren't run off some server; they are run from botnets, and slowing down grandpa's infected computer, which he uses an hour a night, isn't going to be a huge risk to the botnet owners.

I hate to say it, but CAPTCHAs may be the only solution. As others have said, even the ones that beat OCR algorithms can be gamed by hiring third-world workers to solve them manually for pennies, an easily affordable route for a determined attacker of any size. What do those workers all have in common? They are all from non-English-speaking countries. Hiring enough people from English-speaking countries would be prohibitively expensive and difficult, since participating in an attack on an online service is illegal in most English-speaking countries. It follows that the solution to the CAPTCHA problem is not a technological one but a linguistic one: make the CAPTCHA require proficiency in English, and for this purpose over 90% of onion users are covered.

> slowing down granpas infected computer that he uses an hour a night isnt going to be some huge risk to the botnet owners.

Even worse, on the basis of personal observation, a large fraction of grandpas are unable or unwilling to learn how to update their software, and are even continuing to use very outdated and utterly unpatched operating systems. No doubt the botnet owners love to exploit this.

> most ddos's arent run off some server

I am not sure that is true. Someone like Brian Krebs could speak more authoritatively about this, but my understanding is that many cloud services are so badly secured (some cloud providers do provide resources for securing a server but the customer fails to use them correctly, and some cloud providers appear not even to pay lip service to security) that these are increasingly used to host cyberwar activities without the knowledge or cooperation of the "owner".

> It follows the solution to the captcha problem is not a technological one, but a linguistic one, make the captcha require a profiency in english,

Maybe I misunderstand what you are proposing here. Are you arguing that TP should try to restrict Tor users to persons who are native speakers of English, in order to hamper an attacker such as GRU? If so, isn't FBI an even greater threat?

It seems to me that proficiency in English sufficient to solve linguistic-based English-language CAPTCHAs is fairly widespread in poor nations where bad actors might hire fifty-centers. For example in the Philippines, where English is widely spoken as a result of a long-running presence of the US Marine Corps, which ran a horrific counterinsurgency program featuring among other torture innovations "the water treatment". (At the dawn of the 20th century, a widely read but now extinct US magazine even featured on its cover a horrifying illustration of a Filipino insurgent being waterboarded by two marines. And some USMC service members played the role of whistleblowers. And the clownish Governor General of the Philippines, later a US President and US Supreme Court Chief Justice, slipped up during his testimony to Congress and admitted that he knew about the widespread practice of waterboarding, adding without being prompted that he understood that the procedure proved fatal in about one in four attempts.)

Clearly TP, far from trying to limit the Tor user base, needs to expand it as widely as possible. Indeed, many of the people who most need Tor to evade censorship and to get word of government corruption and abuses to "Western" journalists who are increasingly prone to publish stories detailing genocide, torture and corruption, are citizens of "third world" countries with long histories of colonial rule followed by corrupt and incompetent authoritarian post-independence governments. Or are citizens of former-Soviet republics such as Belarus, or former Soviet client states such as Egypt or Syria.

> Proof of work may be pointless

Proof of work provides at least two different qualities here. Not just expense (what you're referencing, I think), but also time. No matter the size or computing power of the botnet, if you make each rendezvous handshake take twice as long for the client, you will cut the attack rate in half.

That means the attacker would need a botnet twice the size in order to perform the same attack. It doesn't provide perfect protection, but implementing a small PoW is

- very simple in design, compared to a token-based approach

- probably not very difficult to implement in Tor

- completely transparent to users (except for a small delay, only when the onion service is under attack)

- probably could be made completely transparent to onion service operators too
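To illustrate how small such a PoW can be, here is a generic hashcash-style puzzle built on SHA-256. It is not the puzzle Tor would actually ship, and `seed`, `difficulty`, `solve`, and `verify` are hypothetical names. Each extra bit of difficulty doubles the client's expected work (about 2**difficulty hashes), while the service checks a solution with a single hash:

```python
import hashlib
import os


def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    bits = 0
    for byte in digest:
        if byte:
            return bits + 8 - byte.bit_length()
        bits += 8
    return bits


def _hash(seed: bytes, nonce: int) -> bytes:
    return hashlib.sha256(seed + nonce.to_bytes(8, "big")).digest()


def solve(seed: bytes, difficulty: int) -> int:
    """Client: brute-force a nonce; expected cost is about 2**difficulty hashes."""
    nonce = 0
    while leading_zero_bits(_hash(seed, nonce)) < difficulty:
        nonce += 1
    return nonce


def verify(seed: bytes, nonce: int, difficulty: int) -> bool:
    """Service: checking a solution costs a single hash."""
    return 0 <= nonce < 2**64 and leading_zero_bits(_hash(seed, nonce)) >= difficulty


if __name__ == "__main__":
    seed = os.urandom(32)  # hypothetical seed published by the service
    nonce = solve(seed, 16)  # about 65,536 hashes on average
    print(verify(seed, nonce, 16))  # True
```

The asymmetry now runs in the service's favor: the client pays exponentially in `difficulty`, the service pays a constant.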

I posted the comment to which you are replying, but I agree with what you just said.

I also feel that PoW seems less likely than tokens to unintentionally exclude legitimate onion users, but you are right that I missed a point: at the cost of doubling connection times (probably acceptable), the cost to attackers doubles. I am not sure that would be an obstacle for state-sponsored attackers, e.g. Belarus, Syria, GOP, however.

> I am not sure that would be an obstacle for state sponsored attackers e.g. Belarus, Syria, GOP, however.

Very true, and very possible. It'll never be a perfect solution. That being said... we need to start somewhere. It won't be perfect, but if we can start by making attacks even 1% harder, it's a start. That's why I think PoW is a solution at least for now, because it provides some level of protection without being extremely difficult to implement.

Also, a lot of commenters keep saying stuff like "but X government could afford an attack that overpowers this defense and that defense." (I'm not singling you out - a lot of people said it.) First of all, a good mitigation mechanism will scale along with the attack. Second, keep in mind, at some point along the curve, if they could spend *that* much on a DoS, then they can afford much more effective attacks too. For example a massive global Sybil attack, or something like that. At which point why bother with DoS at all? So, in practice there is a price ceiling to what we're trying to protect against here; beyond that point DoS should be the least of our worries.
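A sketch of what "scaling along with the attack" could look like, in the spirit of the post's idea that defenses should rise with load and default to answering everything. This hypothetical controller raises a puzzle difficulty by one bit while the pending-request queue stays above a high-water mark and lowers it again as the queue drains; the thresholds and the one-bit-per-step policy are assumptions:

```python
from dataclasses import dataclass


@dataclass
class DifficultyController:
    """Hypothetical load-based controller for a client puzzle's difficulty."""

    high_water: int = 100  # queue length treated as "under attack" (assumed)
    low_water: int = 10  # queue length treated as "calm" (assumed)
    max_difficulty: int = 24  # cap so legitimate clients can still connect
    difficulty: int = 0  # 0 means no puzzle: answer everything

    def update(self, queue_len: int) -> int:
        """Call periodically with the current queue length; returns the new difficulty."""
        if queue_len > self.high_water and self.difficulty < self.max_difficulty:
            self.difficulty += 1  # each bit doubles the attacker's cost per request
        elif queue_len < self.low_water and self.difficulty > 0:
            self.difficulty -= 1  # relax back toward "no puzzle" once load drops
        return self.difficulty


if __name__ == "__main__":
    ctl = DifficultyController()
    print([ctl.update(q) for q in [500, 500, 500, 50, 5, 5]])  # [1, 2, 3, 3, 2, 1]
```

The gap between the two thresholds keeps the difficulty from oscillating when the queue hovers near a single cutoff.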

A little off-topic, but can The Tor Project please shift all related URLs to v3 onion resources soon and list them at onion.torproject.org? (v2 onions will be defunct in the near term.)

Yep. That should happen soon!

>For example, we want to be…

A centralized token issuing server from a third party sounds like a privacy nightmare. .onion services should always be able to remain independent and not rely on any external services.

Hello there,

This token-issuing server would only be used in the case of a DoS attack. Also, it would not be the only way to acquire tokens; it would be the Minimum Viable Product to get this concept launched. Onion services would also be able to give out tokens to their users based on their own criteria, and we can also come up with decentralized token-issuing servers.
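One well-known way to let an issuer hand out tokens without being able to link them to later redemptions (the privacy worry raised above) is Chaum-style RSA blind signatures. The sketch below is a toy with tiny, insecure parameters and a simplified hash-to-integer step; it is not necessarily the scheme the post has in mind, and every name in it is hypothetical:

```python
import hashlib
import secrets
from math import gcd

# Toy RSA key from two Mersenne primes: far too small for real use.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # issuer's private exponent


def token_to_int(token: bytes) -> int:
    """Hash a token to an integer mod N (a simplified full-domain hash)."""
    return int.from_bytes(hashlib.sha256(token).digest(), "big") % N


def blind(token: bytes) -> tuple[int, int]:
    """Client: hide the token from the issuer with a random factor r."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if gcd(r, N) == 1:
            break
    return (token_to_int(token) * pow(r, E, N)) % N, r


def issuer_sign(blinded: int) -> int:
    """Issuer: sign (e.g. after a CAPTCHA) without ever seeing the token."""
    return pow(blinded, D, N)


def unblind(blind_sig: int, r: int) -> int:
    """Client: strip r to get a valid signature on the token itself."""
    return (blind_sig * pow(r, -1, N)) % N


class OnionService:
    """Verifies tokens and rejects any token that has already been spent."""

    def __init__(self) -> None:
        self.spent: set[bytes] = set()

    def redeem(self, token: bytes, sig: int) -> bool:
        if token in self.spent or pow(sig, E, N) != token_to_int(token):
            return False
        self.spent.add(token)
        return True


if __name__ == "__main__":
    service = OnionService()
    token = secrets.token_bytes(32)
    blinded, r = blind(token)
    sig = unblind(issuer_sign(blinded), r)
    print(service.redeem(token, sig))  # True
    print(service.redeem(token, sig))  # False: already spent
```

The issuer only ever sees `blinded`, which is uniformly randomized by `r`, so it cannot match an issued signature to the token later presented to the onion service.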

I like the anonymous token idea. It would be interesting if there were some system in place in which a user submits a PGP key to a keyserver whose purpose is token distribution.

Some API requests to this keyserver would distribute/burn tokens for a user (public key), and websites could use them in their workflow in certain scenarios (positive contributions, etc.) to increase a user's reputation (token count). A user's token balance could be queried anonymously using zero-knowledge proofs (e.g. "Does the user have > N tokens?") to determine whether they are "trustworthy" enough to use the service.

I would love to see the Bitcoin Lightning Network integrated, so you could pay the site owner on each request, but I think this is too complicated, or maybe not even possible, for privacy, security, or software-architecture reasons.